“The days of giving digital a pass are over. It’s time to grow up.”

-Marc Pritchard, Chief Brand Officer, Procter & Gamble, January 2017

When the CBO of P&G tells us to grow up, we listen. And after speaking with clients at last month’s Media Insights Conference, it’s clear that there’s consensus: online advertising research needs to get more sophisticated.

We’re here to help. IAB breaks research down into three phases: design, recruitment & deployment, and optimization. We’ll walk through each phase and identify what most needs to “grow up.” We’ll also suggest questions to ask your research partner to help increase the sophistication of your ad effectiveness research.

Design

Let’s start by acknowledging that statistically sound online ad effectiveness research has not been easy to implement at reasonable cost until recently. As IAB notes, “Questions around recruitment, sample bias and deployment are hampering the validity of this research and undermining the industry as a whole.”

Just because perfect research design is challenging to achieve doesn’t mean advertisers should settle for studies with debilitating flaws that produce biased, unreliable results. Beyond the challenges inherent to good research design, most ad effectiveness research partners have systematic biases stemming from how they find respondents, and these must be accounted for in the design phase. Within the past year, new technology has emerged in this space that reduces or eliminates systematic bias in respondent recruitment.

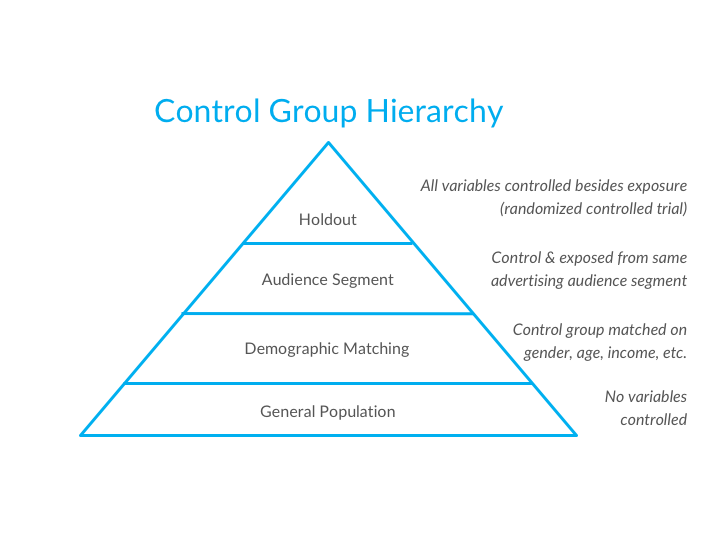

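Assuming you’re able to address the systematic bias of your research partner’s sampling, the major remaining challenge is how you approach the control group. At Upwave, we think about this as a hierarchy, from most to least rigorous: holdout group, Audience Segment, Demographic Matching, and GenPop sampling.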

Using a holdout group is best practice, but implementing it requires spending some portion of your ad budget strictly on the control group. In other words, some of your ad budget will be spent on intentionally NOT showing people an ad. A small portion of people in the ad buy will instead be shown public service announcements to establish the control group. We love the purity of this approach, but we also understand the reality of advertising budgets. We don’t view holdout as a requirement for sound online ad effectiveness research. Smart design combined with technology can achieve methodologically sound control groups without “wasting” ad budget.

That’s why the Audience Segment approach has become de facto best practice for many of our clients. You create your control group from the same audience segment you’re targeting in the ad buy. This isn’t perfect, since there could be an underlying reason that some people in the segment saw the ad and others didn’t (e.g., some people rarely go online, or visit very few websites), but it’s still an excellent approach. It’s the grown-up version of Demographic Matching.
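To make the Audience Segment approach concrete, here is a minimal Python sketch. It assumes you have two ID lists: the members of the targeted segment and the IDs the ad server logged as exposed. The function name and inputs are hypothetical; in practice the matching runs through whatever ID integration your research partner supports. The only point it illustrates is that both groups are drawn from the same segment.

```python
def split_by_segment(segment_ids, exposure_log):
    """Split one targeted audience segment into exposed and control groups.

    segment_ids: IDs in the audience segment the ad buy targeted (hypothetical input).
    exposure_log: IDs the ad server recorded as having seen the ad (hypothetical input).
    """
    segment = set(segment_ids)
    exposed = segment & set(exposure_log)  # saw the ad AND belong to the target segment
    control = segment - exposed            # same segment, never served the ad
    return exposed, control


exposed, control = split_by_segment(
    ["u1", "u2", "u3", "u4", "u5"],
    ["u2", "u4", "u9"],  # u9 saw the ad but sits outside the segment, so it is dropped
)
print(sorted(exposed), sorted(control))  # ['u2', 'u4'] ['u1', 'u3', 'u5']
```

This does nothing about the caveat above: if segment members who never saw the ad differ systematically (for example, they rarely go online), the control group inherits that difference.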

Demographic Matching, in which the control group is created by matching as many demographic variables as possible with the exposed group (e.g., gender, age, income), is still a very common strategy. It’s straightforward to accomplish, even with older online research methodologies. But now that online data tells us far more useful things about consumers than their demographic traits, this approach is dated.
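Demographic Matching can be sketched as exact cell matching: for every combination of gender, age band, and income band present in the exposed group, draw the same number of unexposed respondents from that cell. This is a minimal illustration, not any vendor’s method; the field names, the random seed, and the choice of exact (rather than, say, propensity-score) matching are all assumptions.

```python
import random
from collections import Counter, defaultdict

# Hypothetical field names; use whichever demographics your partner actually collects.
MATCH_KEYS = ("gender", "age_band", "income_band")


def demographic_cell(respondent, keys=MATCH_KEYS):
    return tuple(respondent[k] for k in keys)


def demographic_match(exposed, pool, keys=MATCH_KEYS, seed=0):
    """Draw unexposed respondents so each demographic cell matches the exposed group's count."""
    rng = random.Random(seed)
    needed = Counter(demographic_cell(r, keys) for r in exposed)
    by_cell = defaultdict(list)
    for r in pool:
        by_cell[demographic_cell(r, keys)].append(r)
    control = []
    for cell, n in needed.items():
        candidates = by_cell[cell]
        # A short cell is filled as far as possible; a real study would flag or weight it.
        control.extend(rng.sample(candidates, min(n, len(candidates))))
    return control


exposed = [
    {"id": "e1", "gender": "F", "age_band": "25-34", "income_band": "50-75k"},
    {"id": "e2", "gender": "F", "age_band": "25-34", "income_band": "50-75k"},
    {"id": "e3", "gender": "M", "age_band": "35-44", "income_band": "75-100k"},
]
pool = [
    {"id": "p1", "gender": "F", "age_band": "25-34", "income_band": "50-75k"},
    {"id": "p2", "gender": "F", "age_band": "25-34", "income_band": "50-75k"},
    {"id": "p3", "gender": "F", "age_band": "25-34", "income_band": "50-75k"},
    {"id": "p4", "gender": "M", "age_band": "35-44", "income_band": "75-100k"},
    {"id": "p5", "gender": "M", "age_band": "18-24", "income_band": "25-50k"},
]
control = demographic_match(exposed, pool)
print(sorted(r["id"] for r in control))
```

Every cell in the control group mirrors the exposed group, but nothing beyond those three variables is balanced, which is exactly the limitation described above.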

Simply sampling GenPop (the general population) as a control is undesirable. The results are much more likely to reflect pre-existing differences between the exposed and control groups than the effectiveness of the advertising.
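Whichever control strategy you choose, the readout is usually the same comparison: the share of exposed respondents giving the desired answer versus the share of control respondents. The sketch below computes absolute and relative lift plus a standard two-proportion z-test, assuming a yes/no survey KPI such as brand recall; the numbers are made up for illustration. The test assumes the two groups differ only in ad exposure, which is exactly what a GenPop control breaks: the arithmetic still runs, but the “lift” then mixes ad impact with pre-existing group differences.

```python
from math import erf, sqrt


def brand_lift(exposed_yes, exposed_n, control_yes, control_n):
    """Absolute/relative lift and a two-sided two-proportion z-test (pooled variance)."""
    p_exposed = exposed_yes / exposed_n
    p_control = control_yes / control_n
    abs_lift = p_exposed - p_control
    rel_lift = abs_lift / p_control if p_control else float("nan")
    # Pooled proportion under the null hypothesis that exposure has no effect.
    pooled = (exposed_yes + control_yes) / (exposed_n + control_n)
    se = sqrt(pooled * (1 - pooled) * (1 / exposed_n + 1 / control_n))
    z = abs_lift / se
    # Standard normal CDF via erf: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    p_value = 2 * (1 - (1 + erf(abs(z) / sqrt(2))) / 2)
    return {"abs_lift": abs_lift, "rel_lift": rel_lift, "z": z, "p_value": p_value}


# Hypothetical numbers: 36% of exposed vs 30% of control recall the brand.
result = brand_lift(exposed_yes=360, exposed_n=1000, control_yes=300, control_n=1000)
print(result)  # abs_lift ≈ 0.06, rel_lift ≈ 0.2, z ≈ 2.85, p_value ≈ 0.004
```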

Questions for your research partner

- What are the known biases among respondents due to your recruitment strategy?

- What is your total reach? What percentage of the target group is within your reach? Is it necessary to weight respondents from low-incidence-rate (low-IR) populations due to lack of scale?

- What’s your approach to creating control groups for online ad effectiveness research?

- For Demographic Matching, how do you determine which demographic characteristics are most important to match?

- How do you accomplish Audience Segment matching?
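On the weighting question: when a low-IR group is underrepresented among completes, each of its respondents is weighted up to that group’s share of the target population. A minimal post-stratification sketch follows, with hypothetical age cells and shares. It also computes Kish’s effective sample size, which shows the cost of heavy weights: the weighted sample behaves like a smaller unweighted one, which is why it’s worth asking whether your partner has the reach to avoid large weights in the first place.

```python
from collections import Counter


def cell_weights(sample_cells, population_shares):
    """Post-stratification weight per cell: target population share / observed sample share."""
    counts = Counter(sample_cells)
    total = sum(counts.values())
    weights = {}
    for cell, target_share in population_shares.items():
        sample_share = counts[cell] / total
        if sample_share == 0:
            raise ValueError(f"no respondents in cell {cell!r}; weighting cannot fix that")
        weights[cell] = target_share / sample_share
    return weights


def effective_sample_size(respondent_weights):
    """Kish approximation: (sum of weights)^2 / sum of squared weights."""
    total = sum(respondent_weights)
    return total * total / sum(w * w for w in respondent_weights)


# Hypothetical study: 18-24s are 20% of the target but only 10% of completes.
sample = ["18-24"] * 10 + ["25-54"] * 60 + ["55+"] * 30
weights = cell_weights(sample, {"18-24": 0.2, "25-54": 0.5, "55+": 0.3})
n_eff = effective_sample_size([weights[c] for c in sample])
print(weights, round(n_eff, 1))
```

Here 100 completes carry roughly the statistical power of about 90 unweighted ones; the more extreme the weights, the larger that loss.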

Recruitment & Deployment

Historically, there were four ways to recruit respondents and deploy surveys: panels, intercepts, in-banner, and email lists. To stomach these methodologies, researchers had to ignore one of the following flaws: non-response bias, misrepresentation, interruption of the customer experience, or email list atrophy. In our view, these methodologies have become dated since the advent of the publisher network methodology.

The publisher network works by offering consumers content, ad-free browsing, or other benefits (e.g., free Wi-Fi) in exchange for taking a survey. After the consumer organically visits the publisher, taking the survey serves as an alternative to paying for the content or service. In addition to avoiding the flaws of the old methodologies, the publisher network model provides dramatically increased accuracy, scale, and speed.

Questions for your research partner

- What incentives are offered in exchange for respondent participation?

- What are the attitudinal, behavioral, and demographic differences between someone willing to be in a panel versus someone not interested in being in a panel?

- What are the attitudinal, behavioral, and demographic differences between someone willing to take a site intercept survey versus someone not interested in taking a site intercept survey?

- How much does non-response bias affect the data?

- Are you integrated with the client’s DMP (data management platform)?

- How long does it take to get the survey into the field, and how long until it’s completed?

- How do you ensure that exposure bias doesn’t occur?

- How do you account for straight-liners, speeders, and other typical data quality issues?
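As a rough illustration of the last question, two common checks are easy to express: flag “speeders” who finish far faster than the median respondent, and “straight-liners” who give the identical answer to every item in a rating grid. The 40%-of-median cutoff and the record layout below are assumptions for the sketch; vendors set their own thresholds and typically combine more signals.

```python
from statistics import median

SPEED_RATIO = 0.4  # hypothetical cutoff: under 40% of the median completion time


def flag_low_quality(responses, speed_ratio=SPEED_RATIO):
    """Return {respondent_id: [reasons]} for speeders and straight-liners."""
    median_seconds = median(r["seconds"] for r in responses)
    flagged = {}
    for r in responses:
        reasons = []
        if r["seconds"] < speed_ratio * median_seconds:
            reasons.append("speeder")
        grid = r["grid"]  # answers to one multi-item rating grid
        if len(grid) > 1 and len(set(grid)) == 1:
            reasons.append("straight-liner")
        if reasons:
            flagged[r["id"]] = reasons
    return flagged


responses = [
    {"id": "r1", "seconds": 300, "grid": [4, 2, 5, 3, 4]},
    {"id": "r2", "seconds": 290, "grid": [3, 3, 3, 3, 3]},
    {"id": "r3", "seconds": 80, "grid": [5, 4, 5, 2, 1]},
    {"id": "r4", "seconds": 310, "grid": [2, 4, 3, 5, 4]},
    {"id": "r5", "seconds": 60, "grid": [1, 1, 1, 1, 1]},
]
flagged = flag_low_quality(responses)
print(flagged)  # {'r2': ['straight-liner'], 'r3': ['speeder'], 'r5': ['speeder', 'straight-liner']}
```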

Optimization

An optimal ad effectiveness campaign returns results quickly, so that immediate and continuous adjustments can be made to replace poorly performing creative, targeting, and placements with higher-performing ones. We call this real-time spend allocation. It’s analogous to real-time click-through rate optimization, as both rely on solutions to the same math problem, known as the multi-armed bandit.
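The multi-armed bandit has several standard solutions; Thompson sampling is one of them, and the sketch below uses it to split the next budget increment across creatives. It is not a description of Upwave’s implementation. Assumptions: each creative’s “successes” and “failures” are counts of exposed survey respondents who did or did not give the desired answer, each arm starts from a Beta(1, 1) prior, and budget is allocated in proportion to how often each arm wins a random posterior draw. The creative names and counts are hypothetical.

```python
import random


def allocate_spend(arms, budget, draws=10_000, seed=7):
    """arms: {name: (successes, failures)}. Split budget by estimated P(arm is best)."""
    rng = random.Random(seed)
    wins = {name: 0 for name in arms}
    for _ in range(draws):
        # One Thompson draw per arm from its Beta(1 + successes, 1 + failures) posterior.
        samples = {name: rng.betavariate(1 + s, 1 + f) for name, (s, f) in arms.items()}
        wins[max(samples, key=samples.get)] += 1
    return {name: budget * w / draws for name, w in wins.items()}


arms = {
    "creative_a": (120, 880),  # 12% positive, lots of data
    "creative_b": (90, 910),   # 9% positive, lots of data
    "creative_c": (30, 170),   # 15% positive, little data
}
alloc = allocate_spend(arms, budget=100_000)
print(alloc)
```

Creative C has less data, so its posterior is wide; it should still receive most of the budget because it is probably the best performer, while creative B, which is almost certainly the weakest, gets little or nothing. As new responses arrive, the counts update and the allocation shifts.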

By integrating with DMPs, ad effectiveness research can be cross-tabbed against even more datasets. The results will yield additional insights about a company’s existing customers.
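Cross-tabbing against DMP data amounts to computing the same exposed-versus-control lift within each DMP segment. A minimal sketch, assuming each survey row carries a hypothetical segment label, a group label, and whether the respondent gave the desired answer; the segment names and counts are invented. Small cells produce noisy lifts, so each segment’s result deserves its own significance check before anyone acts on it.

```python
from collections import defaultdict


def lift_by_segment(rows):
    """rows: (dmp_segment, group, positive) tuples, group in {'exposed', 'control'}."""
    # Per segment and group: [positive count, total count]
    tallies = defaultdict(lambda: {"exposed": [0, 0], "control": [0, 0]})
    for segment, group, positive in rows:
        t = tallies[segment][group]
        t[0] += int(positive)
        t[1] += 1
    lifts = {}
    for segment, t in tallies.items():
        (ey, en), (cy, cn) = t["exposed"], t["control"]
        if en == 0 or cn == 0:
            continue  # lift is undefined without both groups in the segment
        lifts[segment] = ey / en - cy / cn
    return lifts


rows = (
    [("existing_customers", "exposed", True)] * 6 + [("existing_customers", "exposed", False)] * 4
    + [("existing_customers", "control", True)] * 5 + [("existing_customers", "control", False)] * 5
    + [("prospects", "exposed", True)] * 3 + [("prospects", "exposed", False)] * 7
    + [("prospects", "control", True)] * 1 + [("prospects", "control", False)] * 9
)
lift = lift_by_segment(rows)
print({k: round(v, 3) for k, v in lift.items()})  # {'existing_customers': 0.1, 'prospects': 0.2}
```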

Questions for your research partner

- Are results reported in real time?

- How much advertising budget is wasted when spend isn’t optimized?

- How can DMP data be incorporated to improve ad research?

Conclusion

Flawed research methodologies can’t grow up; they can only keep lowering prices for increasingly suspect data. For online ad effectiveness research to grow up, new methodologies must be adopted.