We’ve all caught a snippet on our favorite morning info-tainment talk show declaring, “New study finds drinking a glass of wine as good for you as an hour at the gym” or “Latest scientific research shows eating chocolate causes weight loss.” You probably rolled your eyes and thought, “Where do they come up with these ridiculous findings?” You’re right to be skeptical but, in fact, many of these findings are ostensibly validated with a time-honored measure of statistical significance: the p-value. Time-honored no more, though.

You’ll be comforted to know the scientific community is skeptical not just of the findings, but of the methodology as well. Whether it’s a cohort of 800 scientists advocating against p-values or the American Statistical Association itself jettisoning the term “statistical significance,” experts agree that it’s time to stop relying on a metric that came about over a century ago.

This conversation isn’t limited to the scientific research world. P-values and statistical significance are still the most common tools used to validate advertising campaigns. So why aren’t we applying the same critical lens to “statistically significant” campaign reports that we do to eye-roll-inducing research studies? As with science, it’s time for the advertising industry to move on, too.

What is statistical significance, exactly?

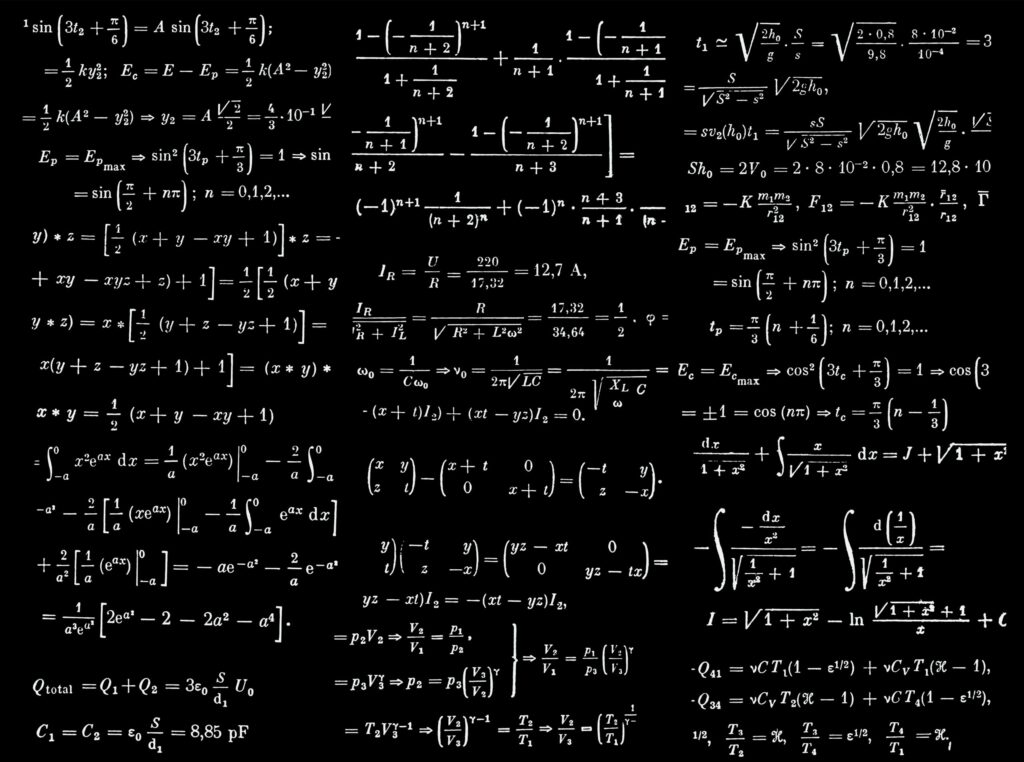

Statistical significance is based on a statistic, the p-value, which measures how likely it would be to see results at least as extreme as the ones observed if there were no real effect at all. It is often misread as the probability that a study’s results could be replicated, which it is not. This calculation allows researchers to evaluate the results from a scientific study after it has finished. Results with a p-value of less than 0.05 are generally considered “statistically significant.” The words themselves imply the results must be, well, significant. In reality, achieving “statistical significance” simply means that results this strong would be unlikely to arise from random chance alone. It says nothing about how large or how important the effect is.
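To make the calculation concrete, here is a minimal sketch of one common way to get a p-value when comparing two rates, a two-proportion z-test. The function name and the survey numbers are hypothetical, chosen only for illustration, and the test itself is an assumption about method rather than a description of how any particular study or vendor computes significance.

```python
import math


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for the difference between two observed rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate: the best single estimate if both groups truly share one rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Probability of a |z| at least this large under a standard normal curve.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical survey: 100 of 1,000 unexposed vs. 130 of 1,000 exposed respondents.
p = two_proportion_p_value(100, 1000, 130, 1000)
print(f"p-value: {p:.3f}")
```

With these made-up numbers the p-value lands under 0.05, so the three-point difference would be labeled “statistically significant.” Note that the label alone does not tell you whether three points is a meaningful difference.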

What is the problem?

While it is clearly important to determine whether an outcome could simply be due to chance, the misuse of statistical significance is so rampant that its value has been eroded.

For starters, if a study collects enough data on enough variables, some correlation will cross the significance threshold by chance alone: at a 0.05 cutoff, roughly one in twenty comparisons with no real effect behind them will still look statistically significant. Exploiting this is a practice commonly known as “p-hacking,” where a researcher searches a study for any correlation whose p-value indicates statistical significance and claims it as a defensible finding. P-hacking, intentional or not, is frequently responsible for those headline-making study findings that contradict common sense.
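A rough sketch of why this happens: the snippet below computes the chance that at least one of 20 independent comparisons looks significant when nothing real is going on, then checks the figure with a small simulation. The function names, the 20 comparisons, the sample sizes and the 10% base rate are all illustrative assumptions, and the simulation assumes the comparisons are independent.

```python
import math
import random


def p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (successes_b / n_b - successes_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def chance_of_false_positive(alpha, comparisons):
    """Chance that at least one of several independent no-effect tests passes."""
    return 1 - (1 - alpha) ** comparisons


def simulate_studies(studies=200, comparisons=20, n=400, rate=0.10, seed=1):
    """Share of simulated studies with at least one 'significant' null result."""
    rng = random.Random(seed)
    hits = 0
    for _study in range(studies):
        for _comparison in range(comparisons):
            # Both groups share the same true rate, so any "finding" is noise.
            a = sum(rng.random() < rate for _ in range(n))
            b = sum(rng.random() < rate for _ in range(n))
            if p_value(a, n, b, n) < 0.05:
                hits += 1
                break
    return hits / studies


print(f"expected share: {chance_of_false_positive(0.05, 20):.2f}")
print(f"simulated share: {simulate_studies():.2f}")
```

Under these assumptions, about 64% of studies with 20 no-effect comparisons would still produce at least one “significant” result to report.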

Second, when statistical significance is evaluated repeatedly in a dynamic environment, the chances of a false positive or false negative increase quickly. Assuming that early results in a test will hold until the end can lead to disastrous consequences.
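The effect of repeated checking can be sketched with a small simulation. Two groups with the same true rate are compared at ten interim “looks” as data accumulates, and the code counts how often any look crosses p < 0.05 versus how often the final look does. Every name and number here (ten looks, batches of 200, a 10% rate) is a hypothetical choice for illustration.

```python
import math
import random


def p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (successes_b / n_b - successes_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_peeking(tests=400, looks=10, batch=200, rate=0.10, seed=7):
    """Return (share flagged at any look, share flagged at the final look)."""
    rng = random.Random(seed)
    flagged_any_look = 0
    flagged_final_look = 0
    for _test in range(tests):
        a = b = n = 0
        seen_significant = False
        significant = False
        for _look in range(looks):
            # Both groups have the same true rate: there is nothing to find.
            a += sum(rng.random() < rate for _ in range(batch))
            b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            significant = p_value(a, n, b, n) < 0.05
            seen_significant = seen_significant or significant
        flagged_any_look += seen_significant
        flagged_final_look += significant  # result of the last look only
    return flagged_any_look / tests, flagged_final_look / tests


any_look, final_look = simulate_peeking()
print(f"false positives when checking at every look: {any_look:.0%}")
print(f"false positives when checking once at the end: {final_look:.0%}")
```

The share flagged at some point along the way can never be lower than the share flagged at the end, and in runs like this it is typically several times higher, even though there is no real difference to detect.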

What this means for brand advertisers

As we’ve explored before, the issues with statistical significance are compounded for brand advertisers when used to measure campaign performance.

Advertising campaigns operate in a multi-faceted, dynamic environment where data is constantly being collected. Campaign tactics need to be measured and adjusted mid-flight to improve performance, but checking for statistical significance repeatedly during a campaign virtually guarantees false positives. In turn, acting on those false positives will decrease campaign performance and have real financial repercussions.

Setting aside the risk of false positives, the assumption is often that if a tactic’s performance is statistically significant, then you can be confident it is outperforming tactics with lower results. In reality, though, a statistically significant result only means the success of an individual tactic itself was not likely due to random chance. It gives no indication of performance compared to other tactics, yet that is exactly what you need to know when optimizing mid-flight. Given that reality, it is imperative for brand advertisers to employ a reliable statistic capable of identifying the relative performance of tactics and accounting for the constant influx of campaign data.
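A small worked example may help here. In the sketch below, two hypothetical tactics each beat a control group with a statistically significant result, yet a direct comparison of the two tactics shows no detectable difference between them. The counts are invented for illustration and the two-proportion z-test is an assumed method, not a description of any specific measurement product.

```python
import math


def p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (successes_b / n_b - successes_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts of (positive responses, respondents) for each group.
control = (100, 1000)   # 10.0%
tactic_a = (135, 1000)  # 13.5%
tactic_b = (140, 1000)  # 14.0%

p_a_vs_control = p_value(*control, *tactic_a)
p_b_vs_control = p_value(*control, *tactic_b)
p_a_vs_b = p_value(*tactic_a, *tactic_b)

print(f"Tactic A vs. control: p = {p_a_vs_control:.3f}")
print(f"Tactic B vs. control: p = {p_b_vs_control:.3f}")
print(f"Tactic A vs. Tactic B: p = {p_a_vs_b:.3f}")
```

Both tactics clear the 0.05 bar against control, but the head-to-head p-value is far above it. On this data, shifting budget from Tactic A to Tactic B because B has the “more significant” result would be acting on noise.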

Takeaways

The American Statistical Association urges that scientific researchers no longer use p-values in isolation to determine whether a result is real. The same should be true in ad measurement. Brand advertisers should shift away from legacy metrics like p-values that are only useful for post-campaign reporting and instead use a metric specifically designed for mid-campaign optimization.

The Upwave Outperformance Indicator was built for this purpose: combining observed lift and confidence levels into a single, sortable metric to reveal which tactics – from publisher to audience to channel and more – are most likely to improve or reduce overall performance while the campaign is still in-flight. With the Upwave Optimization dashboard, advertisers can confidently update stakeholders mid-campaign and optimize before it’s too late.